Stakeholder Interview

Early conversations with Product and Sales clarified that the WebSDK demo was not just a UX surface — it was a business-critical evaluation tool.

For most prospects, this demo was:

- Their first hands-on interaction with Anyline
- Their proof of technical feasibility
- Their basis for deciding whether to move forward

Stakeholders emphasized that if users couldn’t reach a successful scan quickly and confidently, they would not trust the product enough to request a trial — regardless of how powerful the OCR was behind the scenes.

This reframed the design challenge from “make the UX better” to “turn evaluation into a low-risk, high-confidence experience.”

That shift became the foundation for how success was defined, how research was interpreted, and how design decisions were made.

Literature Review

Reviewed:

- Anyline Cloud API documentation
- Brand guidelines
- Scanbooks and sample images

This revealed:

- Strong technical flexibility
- A need for visual clarity and consistent feedback
- The importance of a responsive, accessible UI

Gaps and Opportunities

- Limited OCR-specific UX patterns
- Accessibility vs. visual identity trade-offs
- AI-driven UX: promising, but not mature enough for immediate use

Competitive Research

While direct competitors were not benchmarked, inspiration was drawn from similarly functioning platforms such as iLovePDF, known for:

- Minimal steps
- A clear upload → process → result flow
- Fast time-to-value

This reinforced the need for a task-first, friction-light experience.

User Interviews & Behavioral Patterns

I conducted moderated user interviews in which participants were first given brief context about the product and then asked to explore the experience.

Instead of testing for task success alone, the focus was on how users navigated the flow, where they hesitated, and what influenced their confidence while scanning.

During the sessions, users were prompted to:

- Walk through the scanning flow in their own words
- Highlight moments of confusion or friction
- Explain what slowed them down or made them unsure
- Describe what would make the process feel faster or easier
- Interpret results and articulate whether they trusted the output

Behavioral Patterns Observed

Across sessions, a few consistent behaviors emerged:

- Users hesitated early in the flow: participants often paused at the beginning, unsure which mode to choose or what would happen next
- Scanning felt slower than it actually was
- Lack of feedback reduced trust during scanning
- Navigation required unnecessary mental effort
- Retrying felt costly

Usability Benchmark (SUS)

A baseline System Usability Scale (SUS) test with 6 users was run on the existing WebSDK demo to quantify how easy it felt to use.

- Average SUS score: 52
- Industry benchmark for acceptable usability: ~68

A score of 52 indicates below-average usability and confirms that users were experiencing significant friction while interacting with the demo.
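For readers unfamiliar with how a SUS figure like 52 is produced, the sketch below applies the standard SUS scoring rule in Python. For each respondent, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score from 0 to 100; the study-level figure is the mean across respondents. The function names `sus_score` and `mean_sus` are hypothetical, and any responses passed to them here are invented for illustration, not the six participants' actual answers, which this case study does not report.

```python
def sus_score(responses: list[int]) -> float:
    """Score one respondent's SUS questionnaire (10 items, each rated 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("Each response must be on the 1-5 scale")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        # Odd items are positively worded: contribution = rating - 1.
        # Even items are negatively worded: contribution = 5 - rating.
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    # Each contribution is 0-4, so the raw sum is 0-40; scale it to 0-100.
    return total * 2.5


def mean_sus(all_responses: list[list[int]]) -> float:
    """Average per-respondent SUS scores into one study-level figure."""
    if not all_responses:
        raise ValueError("At least one respondent is required")
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```

For example, `sus_score([4, 2] * 5)` gives 75.0, while an all-neutral respondent (`[3] * 10`) gives 50.0, which illustrates why an average of 52 sits well below the ~68 benchmark.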

Takeaway

The SUS benchmark validated that the existing demo placed unnecessary mental effort on users during a critical evaluation moment. Improving usability wasn’t just about polish—it was essential to building trust, clarity, and engagement.

Heuristic Evaluation

Visibility of System Status

There’s no clear indication of where users are in the process or how many steps remain, which can lead to confusion and uncertainty.

Match Between System and the Real World

Highlight Anyline's advanced OCR capabilities through a seamless demo that drives trial sign-ups and client confidence.

User Control and Freedom

Users aren’t provided with a simple way to rescan or upload a different document, limiting flexibility and control.

Error Prevention

Users can mistakenly upload unsupported formats due to unclear instructions and missing file-type indicators.

Recognition Rather Than Recall

There are no contextual cues for technical terms, and the uploaded image isn’t shown on the result screen, forcing users to rely on memory.

Flexibility and Efficiency of Use

The system lacks shortcuts or alternate paths for users who want to skip certain steps or quickly rescan documents.

Aesthetic and Minimalist Design

The interface, especially on type selection and result screens, feels cluttered and could benefit from visual simplification.

Help and Documentation

The system lacks in-product guidance such as tooltips, onboarding, or contextual help, making it harder for new users to navigate.

Severity ranged from medium to high across most heuristics.

03

From Synthesis to Strategy

Key Behavioral Themes

Interview notes, usability observations, SUS findings, and heuristic issues were clustered using affinity mapping to identify recurring behavioral patterns across users.

This step focused purely on what was observed, without jumping to conclusions.

Key Challenges Identified

Slow Time-to-First-Value

Users took too long to reach their first meaningful scan result because of early hesitation, unnecessary steps, and a lack of visible progress, which weakened first impressions and increased abandonment.


Limited Room for Exploration

The experience made retries and experimentation feel costly, discouraging users from trying variations and different inputs and outputs during evaluation.

Low Confidence During Evaluation

Unclear system feedback, a weak input–output connection, and inconsistent presentation caused users to question scan accuracy and reliability.

Too Much Effort for a Simple Scan

Completing a basic scan required more thinking and interaction than users expected, creating friction disproportionate to the task’s simplicity.

Product Felt Less Polished

Visual clutter, inconsistent behavior across devices, and a lack of refined feedback reduced perceived product maturity during a critical business evaluation moment.

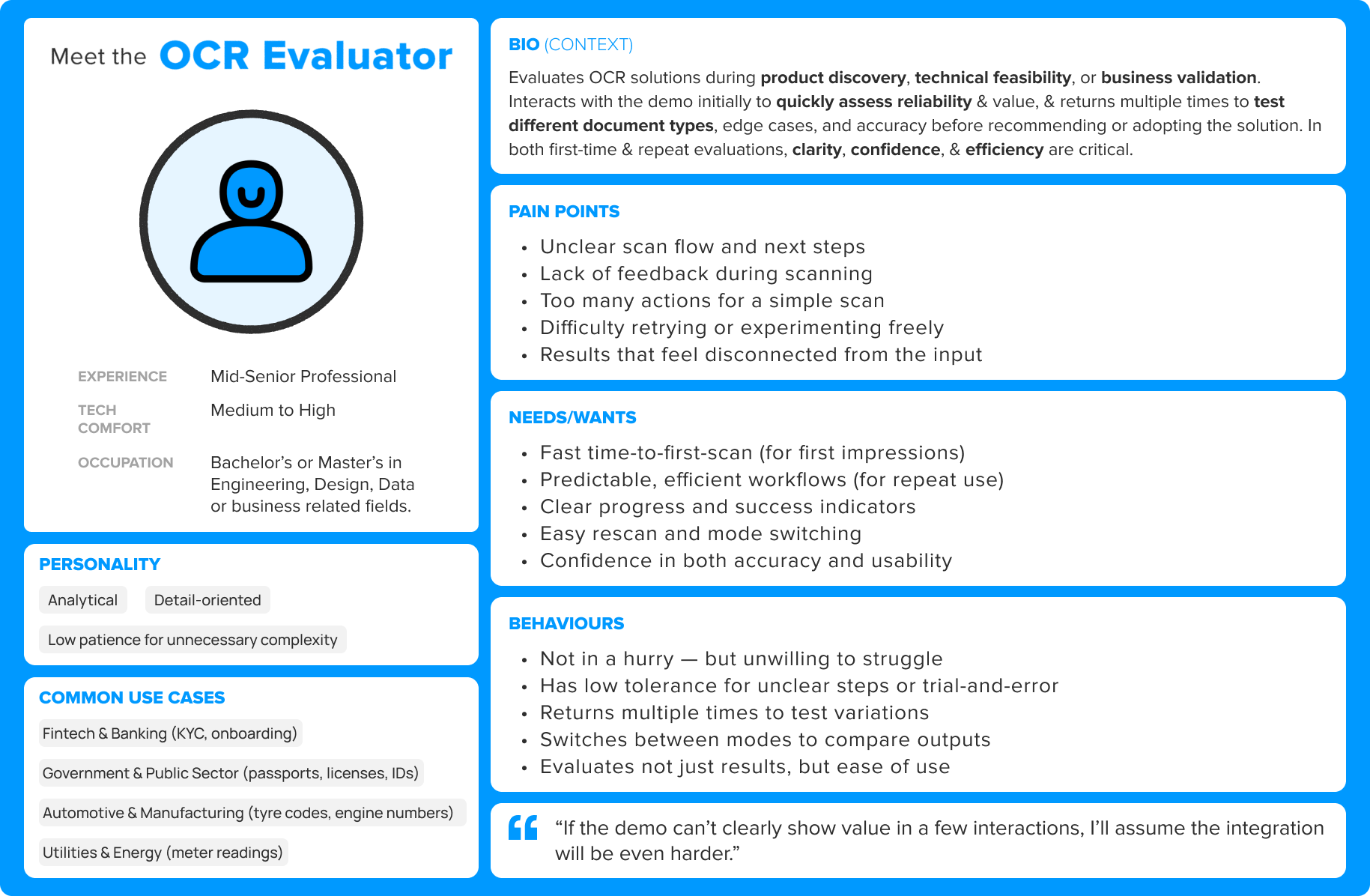

Persona (Research Synthesis)

Insights were distilled into one primary persona:

The OCR Evaluator — a business/technical stakeholder assessing OCR solutions for feasibility and trust.

Insights to Strategy

Strategy: Turn the WebSDK demo into a confidence-building evaluation experience.

User research showed that early demo moments were dominated by hesitation, unclear feedback, and high cognitive effort.

The experience was therefore designed around three strategic principles:

- Fast success builds trust
- Uncertainty kills engagement
- Exploration should feel safe

04

Design Execution

User Flow Evolution (Current → Optimized)

Design Decisions

With the strategic direction clearly defined, design execution focused on translating intent into a fast, confident, and low-effort scanning experience. Visual decisions prioritized clarity, system feedback, and perceived product maturity over feature density.

A brief moodboard helped align the interface with a calm, professional, and trustworthy visual tone.

Strategy 1

Accelerate Time-to-First-Value

Addresses: Slow Time-to-First-Value

Design Decisions

- Simplified the journey into a clear 3-step flow
- Enabled instant upload → auto-scan
- Reduced upfront decisions
- Eliminated explicit “submit” or “start scan” actions
- Added sample/demo images for users without files

Strategy 2

Make System State Always Visible

Addresses: Low Confidence During Evaluation

Design Decisions

- Real-time progress indicators
- Clear loading, success, and error states
- Contextual microcopy explaining what’s happening

Strategy 3

Reduce Cognitive Effort

Addresses: Too Much Effort for a Simple Scan

Design Decisions

- Removed redundant screens and unnecessary confirmations
- Removed silent moments where the system gave no feedback
- Simplified mode selection and grouped related options
- Clarified primary and secondary actions through visual hierarchy
- Replaced technical jargon with human-readable language

Strategy 4

Safe Retry & Exploration

Addresses: Limited Room for Exploration

Design Decisions

- Enabled easy rescan without restarting the flow
- Allowed mode switching from within the results view

Strategy 5

Visual Consistency & Accessibility

Addresses: Product Felt Less Polished

Design Decisions

- Consistent layouts across screen sizes
- Reduced visual clutter to improve focus
- WCAG-compliant contrast and typography
- Subtle animations to reinforce system feedback

The System in Action

These decisions collectively shaped the final experience—one that feels fast, clear, forgiving, and trustworthy during product evaluation.

The following high-fidelity prototypes demonstrate how these decisions come together in a cohesive, end-to-end scanning experience.

Web - Prototype

Mobile - Prototype

System Usability Scale (SUS) Testing

Post-Redesign Outcome

- SUS score improved from 52 → 74 (+22 points)
- This shift moved the experience from below-average to above-average usability, clearing the ~68 benchmark
- It aligned user perception with Anyline’s brand promise of speed, accuracy, and simplicity

What This Means

SUS results validated the redesign’s effectiveness:

Users not only completed tasks faster but felt the system was clearer, easier, and more intuitive, a critical factor in demo-driven product adoption.

Handoff & Collaboration

Worked closely with developers to ensure smooth implementation.

Regular cross-functional reviews helped align visual fidelity, motion behavior, and technical feasibility.

The final build accurately reflected the design vision while maintaining performance and accessibility standards.

05

Outcomes & Learnings

Outcomes

The redesigned WebSDK demo delivered measurable improvements across usability, efficiency, and conversion:

- 40% fewer clicks per scan, driven by a simplified 3-step flow
- ~15s faster Time-to-First-Scan, with even greater gains during repeat scans
- 26% reduction in drop-off
- SUS improved from 52 → 74
- Lead conversion increased from 6% to 10%
- WCAG 2.1 AAA compliant, ensuring accessibility across devices and users
- Delivered on time with 100% client satisfaction

Key Learnings

- Clarity matters as much as speed: simplifying the flow reduced effort, while clear feedback and recovery reduced hesitation.
- Accessibility isn’t optional: designing for WCAG compliance improved clarity and usability for all users, not just edge cases.
- Prototypes accelerate buy-in: letting stakeholders experience the flow firsthand reduced ambiguity and sped up approvals.
- Design efficiency = business efficiency: simplifying workflows reduced user effort while directly improving engagement and conversion.

“The redesigned demo has completely elevated how we present our technology. It’s faster, smarter, and feels smooth.”

— Lucie M., Project Manager, Anyline

That’s a Wrap

In the end, great UX made great tech shine — and Anyline’s demo now scans faster, feels smarter, and sells itself.